Shauna Leonard recently spoke at the Women in Techmakers conference at Queen’s University Belfast. Her talk looked at the Semantic Analysis of Free Text and how it applies to her work with a UK government organisation. Read Shauna’s talk below.

What is Semantic Analysis of Free Text?

It is the process of drawing meaning from text. It is not just about finding the meaning of a single word, but the relationships between multiple words in a sentence. Computers can be used to understand and interpret anything from short sentences to whole documents by analysing their structure to identify the context between the words.

Why is this necessary?

Why do we need to find meaning from particular words and the relationships between them? Because language is complex. Even humans don’t understand context unless it’s provided.

Consider the word “orange”. In one context, it can mean a colour. In another context, it could be a fruit. It could even be a county in California! Similarly, the word “date” can have different meanings depending on the situation. It could mean a day of the year, a fruit or an evening out.

Why is it relevant?

Since 2018 Datactics has been working with a large UK government organisation to develop a semantic analysis process that uses regular expressions to automate decision-making when identifying crime.

The gradual development of the knife crime process, which is the first crime type we started with, has now resulted in a proven methodology that is repeatable for other crime types and extendible to other data domains.

The Problem: Classifying Crime

The quality of crime data is critical for accurate reporting. For example, in England and Wales, police forces report their crime figures on a monthly/quarterly/bi-annual/annual basis. Fulfilling the reporting requirement means an analyst must manually search through 8 different fields looking for the word ‘knife’, which works out at roughly 36 days’ work a year. However, one of the challenges is that figures for the total number of a particular crime can be misreported.

This can be down to simple human error, for instance when crime data is manually entered into police systems, or recorded at the point of crime as free text descriptions with non-standard content. Typos such as “knif”, “knifes” and “nife” can occur, so an exact search for the word “knife” can miss these misspellings.
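To illustrate the point about misspellings, here is a minimal Python sketch comparing an exact search with a slightly more tolerant regular expression. The sample records and both patterns are made up for this illustration; they are not taken from the real process or from real police data.

```python
import re

# Hypothetical free-text descriptions, invented for this example.
records = [
    "Suspect threatened victim with a knife",
    "Victim cut with a knif during robbery",
    "Two knifes recovered at scene",
    "Male seen holding a nife",
    "Bicycle stolen from outside shop",
]

# Exact whole-word search for "knife".
exact = re.compile(r"\bknife\b", re.IGNORECASE)

# Tolerant pattern (an assumption, not the production dictionary):
# optional leading "k", optional "e", optional plural "s", plus "knives".
tolerant = re.compile(r"\b(?:k?nife?s?|knives)\b", re.IGNORECASE)

exact_hits = [r for r in records if exact.search(r)]
tolerant_hits = [r for r in records if tolerant.search(r)]

print(len(exact_hits), "record(s) found by exact search")
print(len(tolerant_hits), "record(s) found by tolerant search")
```

The exact search only finds the first record, while the tolerant pattern also picks up the three misspelled ones and still ignores the unrelated bicycle theft.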

What’s the traditional way of solving the problem?

The traditional method is manual review. A user will manually read through every record in the data set and determine the classification for that record. With thousands of records to review, this can take days to complete, but it will have a much higher accuracy.

An automated count of all the knife keywords is much faster but can be less accurate. A record may be flagged as a knife crime even though it doesn’t meet the official guidance, in which case it should not be counted in the final statistics.

While the manual method is more accurate than an automated count of all the records where a knife is present, it can take a long time to complete and is reliant on an analyst with subject matter expertise. If that analyst is sick or on leave, it leaves the risk that this review won’t be carried out.

Datactics Solution

We follow a four-step methodology, making use of regular expressions to improve the accuracy of crime classification.

Step 1: Cleansing, Filtering and Indexing

First, we cleanse, filter and index the data. In addition to standard cleansing, formatting and validation of data, part of semantic analysis involves the important task of determining a working candidate set of records that are relevant for the semantic analysis process. Filtering out irrelevant records will save time by avoiding unnecessary processing later.

For crime classification this involves filtering based on valid crime codes, record statuses and, most importantly, interrogation of the free text for key words and phrases that indicate potentially relevant content.
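As a rough sketch of this filtering step in Python, the example below keeps only records with a valid crime code, a valid status and at least one relevant keyword in the cleansed free text. The `Record` fields, the crime codes, the statuses and the keyword pattern are all hypothetical placeholders rather than the values used in the real process.

```python
import re
from dataclasses import dataclass


@dataclass
class Record:
    crime_code: str
    status: str
    text: str


# All of these values are assumptions made for the example.
VALID_CODES = {"C001", "C002"}
VALID_STATUSES = {"RECORDED"}
KEYWORDS = re.compile(r"\b(?:K?NIFE?S?|KNIVES|BLADES?|STAB\w*)\b")


def cleanse(text):
    """Collapse runs of whitespace, trim the ends and upper-case the text."""
    return re.sub(r"\s+", " ", text).strip().upper()


def candidate_set(records):
    """Return cleansed copies of the records worth analysing further."""
    candidates = []
    for rec in records:
        text = cleanse(rec.text)
        if (rec.crime_code in VALID_CODES
                and rec.status in VALID_STATUSES
                and KEYWORDS.search(text)):
            candidates.append(Record(rec.crime_code, rec.status, text))
    return candidates


records = [
    Record("C001", "RECORDED", "  victim  stabbed in the arm "),
    Record("C001", "CANCELLED", "suspect had a knife"),      # invalid status
    Record("X999", "RECORDED", "knife found"),               # invalid code
    Record("C002", "RECORDED", "wallet stolen from car"),    # no keyword
]
candidates = candidate_set(records)
print([c.text for c in candidates])
```

Only the first record survives all three filters, so the later, more expensive steps run on a much smaller working set.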

Step 2: Text Standardisation and Assigning Labels

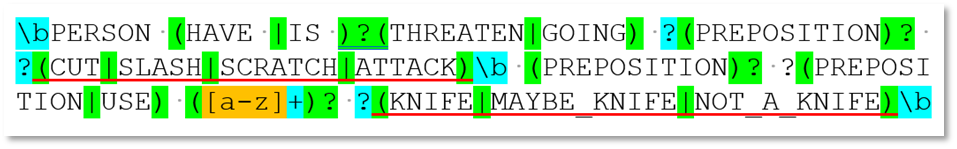

This is the most important step. By making use of regular expressions, the English language (including verbs, people, sharp instruments, prepositions) can be standardised to its simplest form. This includes misspellings.

- Lists of verb forms in different tenses will get standardised to the simple present tense, e.g., STABBING, STABBED, STABS > STAB.

- A list of all nouns and variations of individuals will get standardised to PERSON. E.g., Suspect, Friends, Stranger, Victim > PERSON.

- A list of all the different types of knives will get standardised to the word KNIFE. This includes typos. E.g., BLADE, KNIVES, CLEEVER > KNIFE.

The dictionaries make extensive use of negative/positive lookaheads/lookbehinds and capture groups and need to effectively cover all possible permutations of relevant words and phrases.

Capture groups can then identify the relevant verb or bladed instrument, and are used to generate and assign specific labels to the unlabelled data.
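The standardisation and labelling described above can be sketched in Python as follows. This is only an illustration under stated assumptions: the three small dictionaries reuse the example words from this article plus the misspellings mentioned earlier, while the “BLADE OF GRASS” exception, the label pattern and the label format are hypothetical and not the real dictionaries.

```python
import re

# Tiny illustrative dictionaries; the real ones are far more extensive.
STANDARDISE = [
    (re.compile(r"\b(?:STABBING|STABBED|STABS)\b"), "STAB"),
    (re.compile(r"\b(?:SUSPECT|FRIENDS?|STRANGER|VICTIM)\b"), "PERSON"),
    # The negative lookahead leaves "BLADE OF GRASS" alone (invented exception).
    (re.compile(r"\b(?:BLADE(?!\s+OF\s+GRASS)|KNIVES|CLEEVER|KNIFES|KNIF|NIFE)\b"),
     "KNIFE"),
]

# Capture groups pull out the verb and the weapon from the standardised text.
LABEL_PATTERN = re.compile(
    r"\bPERSON\s+(STAB)\s+PERSON\s+WITH\s+(?:A\s+)?(KNIFE)\b"
)


def standardise(text):
    """Upper-case the text and reduce known words to their standard form."""
    text = text.upper()
    for pattern, replacement in STANDARDISE:
        text = pattern.sub(replacement, text)
    return text


def label(standardised_text):
    """Build a label from the captured verb and weapon, or None if no match."""
    match = LABEL_PATTERN.search(standardised_text)
    if match is None:
        return None
    verb, weapon = match.groups()
    return f"PERSON_{verb}_PERSON_WITH_{weapon}"


clean = standardise("Suspect stabbed victim with a cleever")
print(clean)
print(label(clean))
```

Because every variant collapses to the same few tokens, a single label pattern can cover many different ways of writing the same event.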

Step 3: Classification of High Confidence, Low Confidence and Rejected Records

This involves analysing the labels generated in the previous step and automatically assigning each record to one of three classifications: ‘Confirmed High Confidence’, ‘Confirmed Rejected’, or ‘Low Confidence’, which flags the record for manual review by the user.
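A minimal Python sketch of this kind of rule-based classification is shown below. The label names and the decision rules are assumptions made for the example; the article does not describe the actual rules, which follow the official guidance.

```python
# Hypothetical label sets, invented for this example.
HIGH_CONFIDENCE_LABELS = {"PERSON_STAB_PERSON_WITH_KNIFE"}
REJECT_LABELS = {"NO_KNIFE_PRESENT"}


def classify(labels):
    """Map the labels assigned to one record onto a classification."""
    labels = set(labels)
    has_high = bool(labels & HIGH_CONFIDENCE_LABELS)
    has_reject = bool(labels & REJECT_LABELS)
    if has_high and not has_reject:
        return "Confirmed High Confidence"
    if has_reject and not has_high:
        return "Confirmed Rejected"
    # Conflicting or missing evidence goes to a person for manual review.
    return "Low Confidence"


print(classify(["PERSON_STAB_PERSON_WITH_KNIFE"]))
print(classify(["NO_KNIFE_PRESENT"]))
print(classify([]))
```

Sending anything ambiguous to ‘Low Confidence’ keeps the automated categories conservative, so the user only reviews the records where the labels do not give a clear answer.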

Step 4: Consolidation of Results and Generating Reports

Metrics reports and various other reports are then generated for stakeholders.

Summary

So to summarise, semantic analysis of text involves three key steps:

- Using dictionaries of terminology to identify relevant text items and reduce them to standardised “ingredients”

- Using regular expressions to generate labels based on the standardised word and phrase “ingredients”

- Classifying those labels as high/low/rejected or whatever alternative is appropriate

Effective semantic analysis of free text requires extensive and comprehensive dictionaries of relevant terminology – the good news is that the benefit is cumulative! We can reuse the dictionaries we’ve already created for other crime types. We’ve already got the list of verbs, which can be extended with new terminology for different crime types, or with new and changing slang across the nation.

Benefits of Semantic Analysis

There are still some limitations to this approach. It is a very manual process, where the dictionaries are built up over time by a data engineer. For the knife crime process, it took my colleague and me months of manually reading thousands of records to build up the dictionaries, refining them constantly. It also leverages a lot of local subject matter expertise, which, while useful, clearly puts additional strain on already over-stretched resources. However, it is very much short-term pain for long-term gain.

- Reduced Manual Effort – Over 90% of manually-identified records are now flagged as ‘high-confidence’ automatically, so the user doesn’t need to look at them. The data quality process is now automated, reducing time from days to minutes. Our process runs in approximately 8 minutes with very high accuracy.

- Data standardisation – Data checks are now consistent with Government standards across police forces.

- Consistent categorisation – Improved understanding and reporting of crime. Increased accuracy and consistency of reporting across forces, without relying on flag counting.

- Reduced Psychological impact – Automation reduces the time staff are required to spend analysing distressing records.